Surfing the LLM Waves: Continuously Benefitting from the Ecosystem Advances


On September 12, 2024, OpenAI announced o1-preview, which heralded the era of reasoning Large Language Models: models that not only generate output tokens auto-regressively, but also deliberate over them at inference time (via intermediate thinking tokens) to improve the quality of their answers. This model enjoys a strong quality rating (Artificial Analysis Quality Index: 86), but comes at a high cost (input: $15.00/mt; output: $60.00/mt, where mt stands for million tokens and the output tokens include the thinking tokens). On January 20, 2025, DeepSeek R1 was announced. It delivers even more impressive performance (Artificial Analysis Quality Index: 89) at a ~20-25x lower price (input: $0.75/mt; output: $2.40/mt on DeepInfra). Shortly thereafter, on January 31, 2025, OpenAI followed suit with o3-mini, which matches DeepSeek R1's quality (Artificial Analysis Quality Index: 89) at an intermediate price point (input: $1.10/mt; output: $4.40/mt).
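The price comparison above is simple arithmetic. The Python sketch below reproduces the ~20-25x figure from the list prices quoted in this post and shows how a single request's cost splits across input and output tokens. The `PRICES` table and the helper names are our own, illustrative choices, not part of any provider's API. For reasoning models, remember that the output token count includes the thinking tokens.

```python
# List prices in USD per million tokens ("mt"), as quoted in this post.
# The table and function names are illustrative, not any provider's API.
PRICES = {
    "o1-preview": {"input": 15.00, "output": 60.00},
    "DeepSeek R1": {"input": 0.75, "output": 2.40},  # DeepInfra pricing
    "o3-mini": {"input": 1.10, "output": 4.40},
}


def price_ratio(expensive: str, cheap: str) -> dict:
    """How many times cheaper `cheap` is than `expensive`, per token type."""
    return {
        kind: PRICES[expensive][kind] / PRICES[cheap][kind]
        for kind in ("input", "output")
    }


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """USD cost of one request; for reasoning models, output_tokens includes thinking tokens."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


if __name__ == "__main__":
    print(price_ratio("o1-preview", "DeepSeek R1"))  # input 20x, output 25x cheaper
    print(request_cost("o1-preview", input_tokens=1_000, output_tokens=5_000))
```

The same helpers put o3-mini at roughly 1.5x (input) to 1.8x (output) the price of DeepSeek R1, and at about 1/13.6 of o1-preview's, which is the intermediate price point mentioned above.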

If you are an application developer who benefits from the reasoning capability, should you migrate from o1-preview to DeepSeek R1, and then again to o3-mini? In a field as intensely competitive as frontier LLMs, such potentially disruptive events occur frequently: e.g., when a new frontier LLM arrives, or when a provider such as Groq slashes the cost of access. In the future, as fine-tuning becomes commoditised, we surmise that such events will occur even more frequently. Beyond these step changes, the quality and price of every provider and LLM also drift over time (a phenomenon that a16z christened LLMflation: for the same performance, LLM inference gets 10x cheaper every year). This raises the question: must we migrate continuously?
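As a rough illustration of what LLMflation implies, the Python sketch below projects a price forward under the a16z rule of thumb. The function name is ours, and treating the 10x-per-year rate as a constant is an assumption made for illustration, not a forecast for any particular model or provider.

```python
def llmflation_price(price_today: float, years: float, yearly_factor: float = 10.0) -> float:
    """Projected price for the *same* performance after `years` years.

    Assumes the price falls by a constant `yearly_factor` every year, i.e.
    price(t) = price_today / yearly_factor ** t (the a16z LLMflation rule of thumb).
    """
    return price_today / yearly_factor ** years


if __name__ == "__main__":
    # Example: o1-preview's output price of $60/mt, projected 0.5, 1 and 2 years out.
    for t in (0.5, 1, 2):
        print(f"after {t} year(s): ${llmflation_price(60.0, t):.2f}/mt")
```

Under this assumption, even half a year corresponds to a ~3x drop, so a model choice hard-coded into an application goes stale quickly.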

The question of migration is even more nuanced. Two frontier LLMs with equivalent overall performance may perform differently on different tasks. For example, while o3-mini and DeepSeek R1 share an Artificial Analysis Quality Index of 89, on a quantitative reasoning task (the MATH-500 benchmark) DeepSeek R1 scores 97%, whereas o3-mini scores 92%. This makes the migration decision further contingent on the nature of the application.

An application developer, thus, needs a mechanism for continuous migration, one that enables her to decouple the choice of provider/LLM from the application logic.

As an aside, in the world of finance, a trader needs to reallocate her portfolio in response to (predicted) movements in asset prices. A quantitative trader offloads this task to an algorithm.

At Divyam, we believe that algorithmic, continuous, fractional migration is feasible, with the migration decision offloaded to a router at per-prompt granularity.
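To make the decoupling concrete, here is a minimal Python sketch of a per-prompt router under our own assumptions. `Router`, `Provider` and `RoutingPolicy` are hypothetical names, each provider is stubbed as a plain function from prompt to response, and the policy is a placeholder for whatever routing algorithm is plugged in. The application only ever calls `Router.complete`, so migrating, fully or fractionally, amounts to swapping the policy rather than rewriting application code.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# A provider turns a prompt into a response; a policy maps a prompt to a model name.
Provider = Callable[[str], str]
RoutingPolicy = Callable[[str], str]


@dataclass
class Router:
    """Hypothetical per-prompt router; the application only calls `complete`."""

    providers: Dict[str, Provider]  # model name -> client function (stubbed below)
    policy: RoutingPolicy           # swappable without touching application code

    def complete(self, prompt: str) -> str:
        model = self.policy(prompt)  # the routing decision is made per prompt
        if model not in self.providers:
            raise KeyError(f"policy chose unknown model {model!r}")
        return self.providers[model](prompt)


if __name__ == "__main__":
    # Stub providers standing in for real API clients.
    providers = {
        "o1-preview": lambda p: f"[o1-preview] {p}",
        "deepseek-r1": lambda p: f"[deepseek-r1] {p}",
    }

    # Toy keyword policy; a real router would learn from evaluations (e.g. kNN).
    def toy_policy(prompt: str) -> str:
        return "deepseek-r1" if "math" in prompt.lower() else "o1-preview"

    router = Router(providers, toy_policy)
    print(router.complete("Solve this math problem"))
    print(router.complete("Write a haiku"))

    # "Migrating" everything to one model is just a policy swap.
    router.policy = lambda prompt: "deepseek-r1"
    print(router.complete("Write a haiku"))
```

Keeping the policy as a separate, swappable object is the design choice that matters here: evaluation and routing can evolve independently of the code that consumes the responses.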

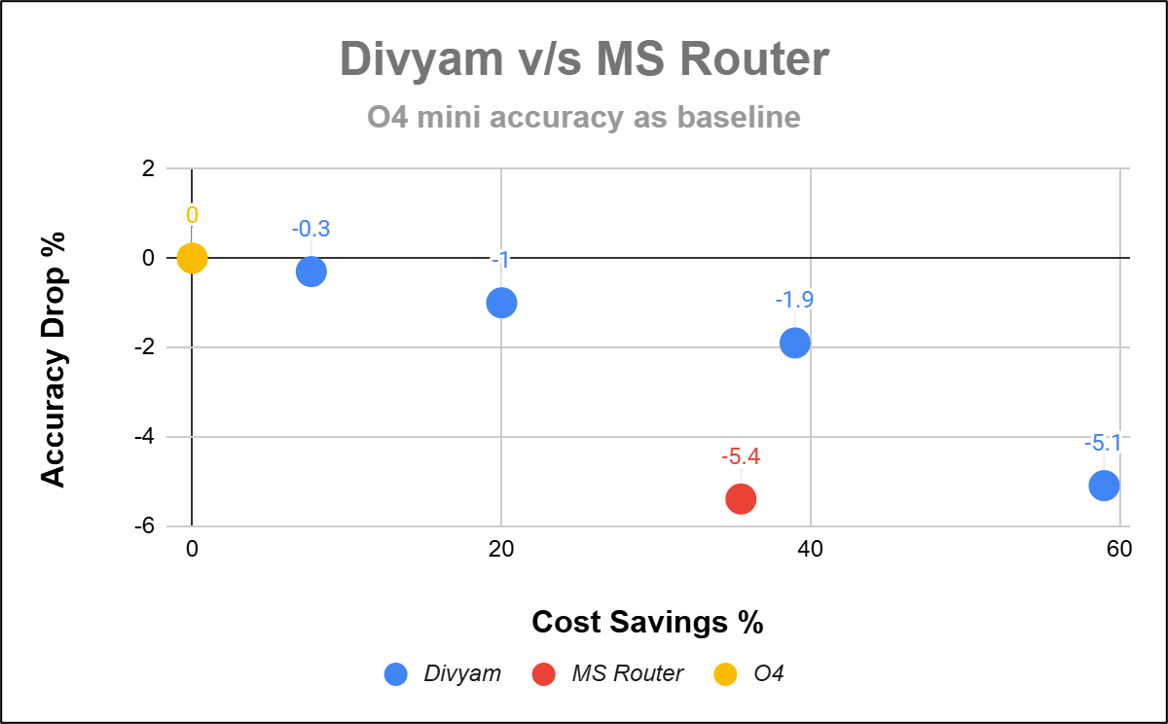

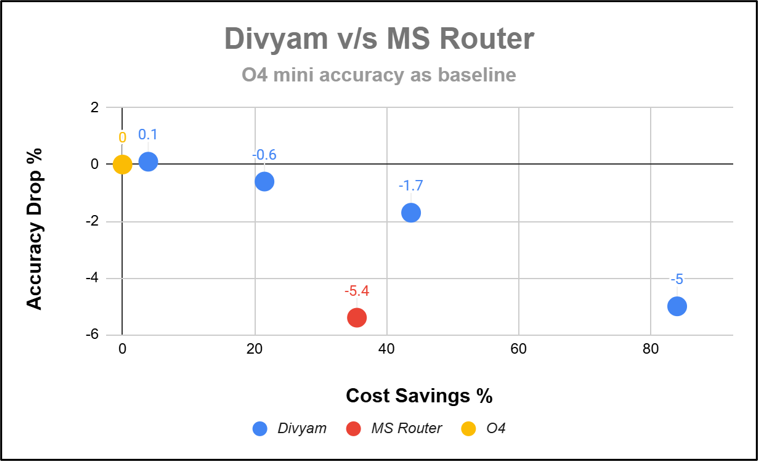

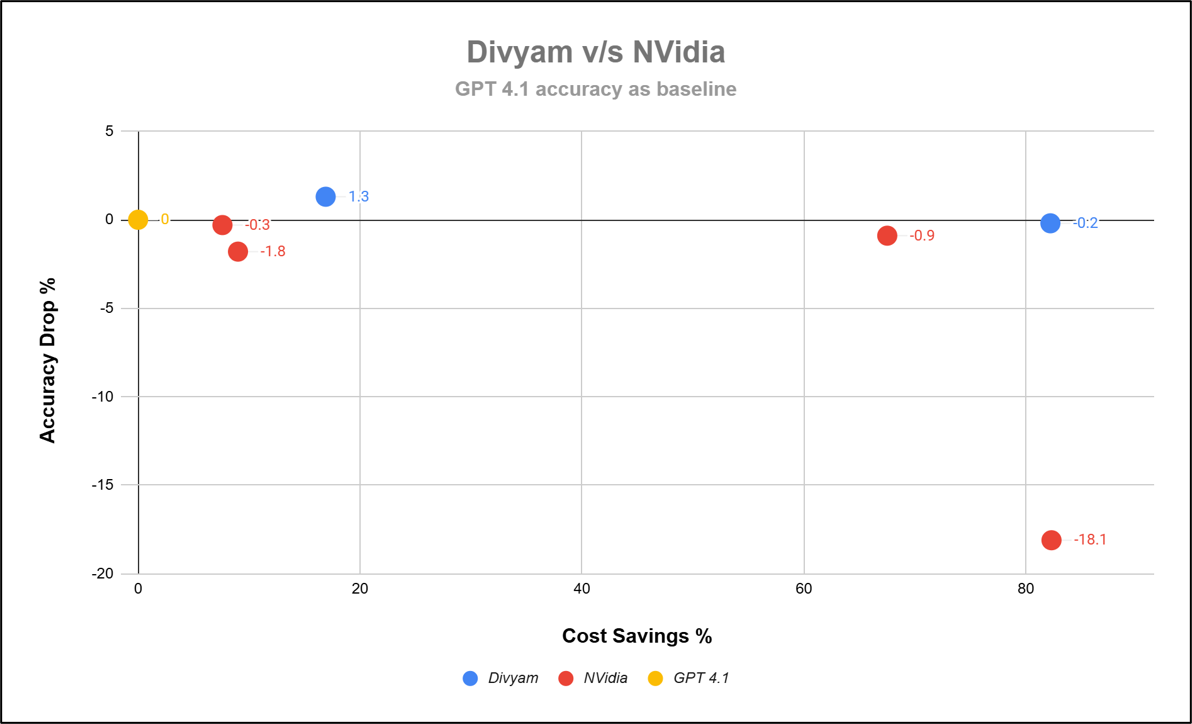

To study the efficacy of routers, we conducted an experiment at Divyam. Specifically, we took the MT-Bench dataset, which contains 80 two-turn conversations between a human expert and an LLM. Using Divyam’s evaluation harness, we replayed these conversations to both o1-preview and DeepSeek-R1-Distill-Llama-70B (input: $0.23/mt; output: $0.69/mt on DeepInfra; performance nearly equal to o1-preview's on MATH-500 and HumanEval), and used o1-preview to judge the resulting responses. The prompt template for the judge follows the best practices listed in the landmark paper “Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena” (note that the application developer can plug in her own eval instead). The results show that 48 of the 80 conversations (60%) elicit an equivalent or better response when the cheaper alternative is used in place of o1-preview. This amounts to slashing a $100 bill to $42.40 (a ~2.4x reduction), at the expense of a slight reduction in quality on half of the conversations (note that this is a function of willingness-to-pay, a knob that the application developer can tune to suit her appetite for the tradeoff). A visual summary is presented in the accompanying figures.
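The per-conversation comparison behind these numbers can be sketched as an "oracle" router that knows, in hindsight, the judged quality of both responses. The Python below is a minimal sketch under our own assumptions: the judge emits a numeric score per response (e.g. on MT-Bench's 1-10 scale), per-conversation costs are known, and the hypothetical `tolerance` parameter stands in for the willingness-to-pay knob (a lower willingness to pay corresponds to a larger tolerated quality drop). The toy numbers are illustrative only and are not the data from our experiment.

```python
from typing import List, Tuple


def oracle_route(
    quality_expensive: List[float],
    quality_cheap: List[float],
    cost_expensive: List[float],
    cost_cheap: List[float],
    tolerance: float = 0.0,
) -> Tuple[List[str], float, float]:
    """Send a conversation to the cheap model whenever its judged quality is within
    `tolerance` of the expensive model's.

    Returns (decisions, share routed to the cheap model, routed cost as a
    percentage of the all-expensive baseline, i.e. the "$100 bill").
    """
    decisions: List[str] = []
    routed_cost = 0.0
    for qe, qc, ce, cc in zip(quality_expensive, quality_cheap, cost_expensive, cost_cheap):
        if qc >= qe - tolerance:
            decisions.append("cheap")
            routed_cost += cc
        else:
            decisions.append("expensive")
            routed_cost += ce
    share_cheap = decisions.count("cheap") / len(decisions)
    bill = 100.0 * routed_cost / sum(cost_expensive)
    return decisions, share_cheap, bill


if __name__ == "__main__":
    # Toy data for four conversations: judge scores and per-conversation USD costs.
    q_exp, q_cheap = [8, 9, 7, 6], [8, 7, 7.5, 5.5]
    c_exp, c_cheap = [1.0, 1.0, 1.0, 1.0], [0.02, 0.02, 0.02, 0.02]
    for tol in (0.0, 1.0):
        decisions, share, bill = oracle_route(q_exp, q_cheap, c_exp, c_cheap, tol)
        print(f"tolerance={tol}: {decisions}, cheap share={share:.0%}, bill=${bill:.2f}")
```

With a zero tolerance, the share routed to the cheap model is the "equivalent or better" fraction; raising the tolerance routes more traffic to the cheaper model and lowers the bill, at the cost of accepting larger quality drops.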

This, however, is only an upper bound, since it assumes we know in hindsight which LLM performs better on each conversation. To operationalise this insight, one needs to actually build a router. While a detailed discussion of routing algorithms is deferred to a later blog post, we illustrate the intuition behind a conceptually simple routing algorithm: k-Nearest Neighbour (kNN). kNN builds an “atlas” of all the prompts in the application log, and remembers the quality that each LLM yielded on them. Presented with a new prompt, kNN places it on that atlas, looks up its k nearest neighbours, and routes it to the LLM that yielded the highest average quality in this neighbourhood. The accompanying figure (left panel) visualises the atlas of MT-Bench. This atlas was obtained by first embedding each prompt into a 384-dimensional space with the “all-MiniLM-L12-v2” Sentence Transformer, then projecting the embeddings onto the plane with t-SNE (a dimensionality-reduction algorithm), and lastly colouring each conversation according to the best-performing LLM for it. The right panel segments the plane according to the routing decision: with k=3, the kNN router sends a prompt that maps to a red region to DeepSeek, and a prompt that falls into the green region to o1-preview.
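The kNN routing rule described above can be sketched in a few lines of Python. This is a minimal illustration, not Divyam’s implementation: the function name is hypothetical, neighbours are ranked by Euclidean distance (an assumption; cosine similarity is a common alternative for sentence embeddings), and the atlas is a plain list of vectors paired with per-model judge scores. The sketch accepts vectors of any dimension, so it works on either the raw prompt embeddings (e.g. from `SentenceTransformer("all-MiniLM-L12-v2").encode(prompts)` in the sentence-transformers library) or their 2-D t-SNE projection.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence


def knn_route(
    query: Sequence[float],
    atlas: List[Sequence[float]],
    qualities: List[Dict[str, float]],
    k: int = 3,
) -> str:
    """Route `query` to the model with the highest mean quality among its k nearest
    logged prompts. `qualities[i]` maps model name -> judged quality on `atlas[i]`."""
    # Indices of the k logged prompts closest to the query (Euclidean distance).
    nearest = sorted(range(len(atlas)), key=lambda i: math.dist(query, atlas[i]))[:k]
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for i in nearest:
        for model, score in qualities[i].items():
            totals[model] += score
            counts[model] += 1
    # Pick the model with the best average score in this neighbourhood.
    return max(totals, key=lambda m: totals[m] / counts[m])


if __name__ == "__main__":
    # Toy 2-D "atlas" standing in for prompt embeddings, with per-model judge scores.
    atlas = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
    qualities = [
        {"deepseek": 9, "o1-preview": 7},
        {"deepseek": 8, "o1-preview": 7},
        {"deepseek": 9, "o1-preview": 8},
        {"deepseek": 5, "o1-preview": 9},
        {"deepseek": 6, "o1-preview": 8},
        {"deepseek": 4, "o1-preview": 9},
    ]
    print(knn_route((0.2, 0.2), atlas, qualities, k=3))  # deepseek
    print(knn_route((5.2, 5.2), atlas, qualities, k=3))  # o1-preview
```

In this sketch, adding a newly released LLM only requires scoring it on the logged prompts and adding its scores to `qualities`; the routing rule itself stays the same.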

At Divyam, we built an agentic workflow in which agents specialising in evaluation, routing, etc. collaborate to facilitate continuous and fractional (i.e., per-prompt) migration. This allows the application developer to focus entirely on application development, free of any distraction posed by migration. The workflow requires only a low-touch integration with the application, and can be deployed on the client’s infrastructure.

