AI Strategy focused on maximizing returns on your GenAI investments


As Generative AI (GenAI) continues to reshape industries across the spectrum, embracing it with strategic, ethical, and operational foresight will determine how far businesses can harness its transformative potential and build a future defined by success, sustainability, and societal contribution. However, GenAI faces its fair share of adoption hurdles. Organizations committed to leveraging it must navigate myriad challenges while ensuring both that their solutions are effective and that they are applied ethically.

Consider two of these challenges:

Ensuring Adaptability and Scalability – With scores of GenAI products in today's ever-evolving market, organizations are continually left wondering whether they have chosen the right one. The risk of vendor lock-in looms large because the costs of adapting and scaling are formidable. Your choice of LLM for each application depends on a crucial balance of cost versus benefit, and that balance is not static: it needs continuous evaluation as GenAI advances.

Accuracy and hallucinations – Your organization has GenAI-based solutions, but you are continually concerned about the quality of their output. There are techniques to reduce AI models' tendency to hallucinate, such as cross-checking one model's results against another's, which can bring the hallucination rate below 1%. How far you go to mitigate hallucinations depends largely on the use case, but it is something your AI developers need to work on continually.
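A minimal sketch of the cross-checking idea, assuming you already have answers to the same prompt from two different models. Real cross-checkers usually compare meaning (for example with an embedding model or a judge LLM); purely as a stand-in, this sketch uses lexical similarity from Python's standard `difflib`. The function name `cross_check` and the 0.8 threshold are hypothetical choices, not part of any product.

```python
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lower-case and collapse whitespace so formatting noise is ignored."""
    return " ".join(text.lower().split())


def cross_check(primary: str, reviewer: str, threshold: float = 0.8) -> tuple[bool, float]:
    """Compare two models' answers to the same prompt.

    Returns (agree, similarity), where similarity is difflib's ratio in [0, 1].
    Lexical similarity is only a stand-in for a semantic comparison.
    """
    score = SequenceMatcher(None, normalize(primary), normalize(reviewer)).ratio()
    return score >= threshold, score


if __name__ == "__main__":
    agree, score = cross_check("Paris is the capital of France.",
                               "paris is the  capital of France.")
    print(agree, score)  # True 1.0
```

Answers whose similarity falls below the threshold would be sent for review or regenerated rather than shown to the user.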

These two challenges are compounded by the fact that your organization's AI skill set must be continually upgraded, and your skilled workforce must also experiment with, and train your applications on, new entrants in the GenAI market so that you do not miss out on the benefits they offer. All of this affects your time to market and your cost of production.

What if a solution chose the best LLMs for you, fine-tuned them for your application, continuously evaluated the quality of your output, and provided excellent observability across all your GenAI use cases, all while keeping your cost-benefit ratio optimized?

Divyam.AI is the solution you are looking for.

If your organization has already established inference pipelines using GenAI, Divyam can help you upgrade to the model best suited to your application. The best model for your use case is made accessible through a universal API that follows the OpenAI API standard. Divyam's model selector receives each prompt from your application through this API and routes it to the LLM best suited to that prompt.
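Because the API follows the OpenAI API standard, a client talks to it the same way it talks to OpenAI: a `POST` to `{base_url}/chat/completions` carrying a `model` and a list of `messages`. The sketch below builds such a request with the Python standard library without sending it. The Divyam base URL is a hypothetical placeholder (the post does not give one), as are the API key and the model name.

```python
import json
import urllib.request

# Any OpenAI-compatible endpoint accepts the same request shape,
# so switching providers means changing only this base URL.
BASE_URL = "https://api.openai.com/v1"          # current provider
# BASE_URL = "https://divyam.example.com/v1"    # hypothetical placeholder endpoint


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request(BASE_URL, "sk-placeholder", "your-model-name", "Summarize our Q3 report.")
    print(req.get_method(), req.full_url)  # POST https://api.openai.com/v1/chat/completions
    # Actually sending it would be: urllib.request.urlopen(req)
```

Pointing `BASE_URL` at a different OpenAI-compatible endpoint leaves the rest of the calling code unchanged, which is what makes a drop-in gateway practical.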

What makes it unique is that you get a leaderboard of models to choose from at per-prompt granularity. Without Divyam, you would need to employ data scientists to experiment and choose the best model for your application. Moreover, choosing the best model for each individual prompt is a hard problem, one you would rather have solved for you by a plug-and-play solution like Divyam. The LLM chosen by Divyam's fabric could be an API-based model such as ChatGPT or Gemini, or a model such as Llama that is self-hosted on your inference server.
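The post does not describe how Divyam's selector works internally. As an illustration only, here is one simple per-prompt routing rule: given a leaderboard of candidates with an estimated quality for the current prompt, pick the cheapest model that clears a quality bar, and fall back to the best-scoring model when none does. The `Candidate` fields, model names, and prices are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    model: str                 # hypothetical model identifier
    est_quality: float         # estimated quality for THIS prompt, in [0, 1]
    cost_per_1k_tokens: float  # illustrative price in USD


def select_model(leaderboard: list[Candidate], min_quality: float) -> Candidate:
    """Return the cheapest candidate whose estimated quality meets min_quality.

    If no candidate clears the bar, fall back to the highest-quality one.
    """
    if not leaderboard:
        raise ValueError("leaderboard is empty")
    eligible = [c for c in leaderboard if c.est_quality >= min_quality]
    if eligible:
        return min(eligible, key=lambda c: c.cost_per_1k_tokens)
    return max(leaderboard, key=lambda c: c.est_quality)


if __name__ == "__main__":
    # Made-up leaderboard for a single prompt; numbers are illustrative only.
    board = [
        Candidate("large-api-model", 0.92, 0.010),
        Candidate("mid-api-model", 0.88, 0.002),
        Candidate("self-hosted-llama", 0.81, 0.0005),
    ]
    print(select_model(board, min_quality=0.85).model)  # mid-api-model
    print(select_model(board, min_quality=0.95).model)  # large-api-model (fallback)
```

In practice the hard part is producing a trustworthy `est_quality` per prompt; the selection rule itself is simple once those estimates exist.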

If you run your application through Divyam, you also need not worry about fine-tuning the model on your inference server. Divyam's fine-tuner takes that headache off your hands: it has built-in intelligence that chooses parameter values suited to your application's usage patterns and uploads the fine-tuned model back to your inference server. This continuously gives your users an evolving experience and keeps your inference-server model performing at its best.

If Divyam has chosen API-based LLMs for your application and you wonder whether you are still at your peak cost-benefit ratio, Divyam's evaluation engine has you covered. The evaluator runs in the background and continuously A/B tests your current model against older, cheaper model versions, so that your application always achieves an almost equal or higher performance index.
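A minimal sketch of the decision such an evaluator makes, assuming both the current model and a cheaper challenger have been scored on the same sample of prompts by the same evaluator. "Almost equal" is modeled here as a hypothetical `tolerance` on the mean score; this is an illustrative rule, not Divyam's actual evaluation method.

```python
from statistics import mean


def should_switch(incumbent: list[float], challenger: list[float], tolerance: float = 0.02) -> bool:
    """Decide whether a cheaper challenger is 'almost equal or better'.

    Both lists hold quality scores in [0, 1] for the SAME prompts, produced by
    the same evaluator. The challenger qualifies if its mean score is at most
    `tolerance` below the incumbent's mean (tolerance is a hypothetical knob).
    """
    if not incumbent or len(incumbent) != len(challenger):
        raise ValueError("need paired, non-empty score lists")
    return mean(challenger) >= mean(incumbent) - tolerance


if __name__ == "__main__":
    current = [0.90, 0.80, 0.85]  # illustrative scores, current model
    cheaper = [0.88, 0.79, 0.84]  # illustrative scores, older/cheaper model
    print(should_switch(current, cheaper))  # True
```

A production A/B test would also check sample size and statistical significance before switching; the sketch only shows the shape of the comparison.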

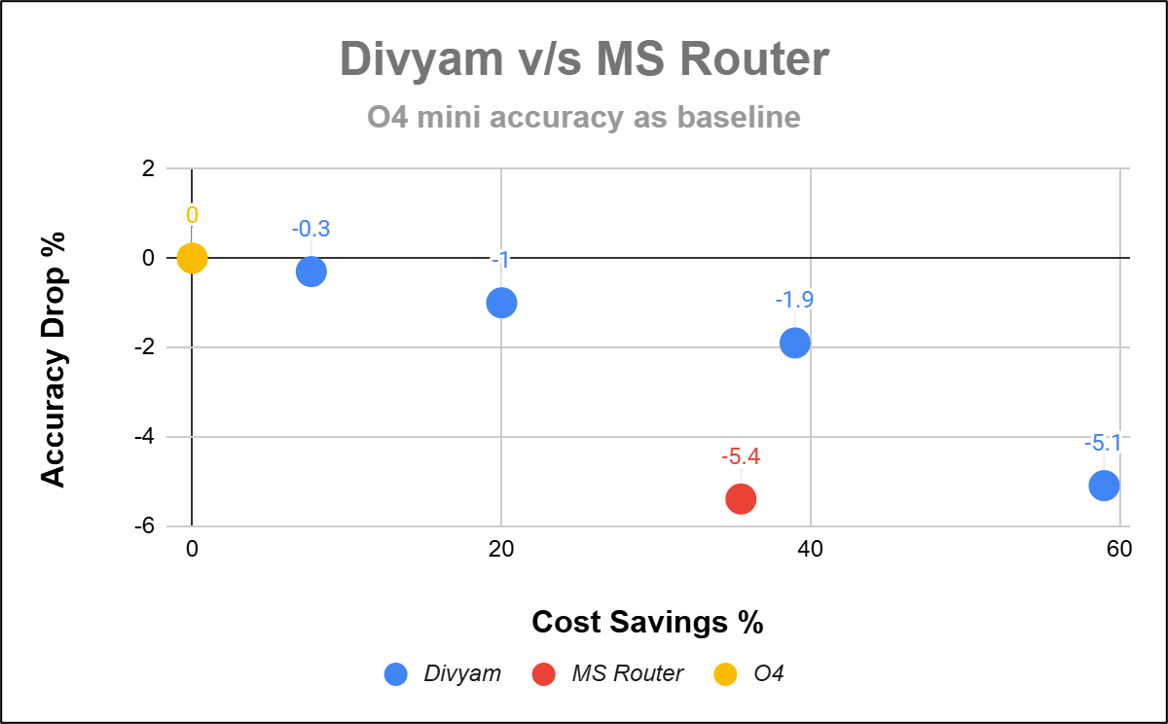

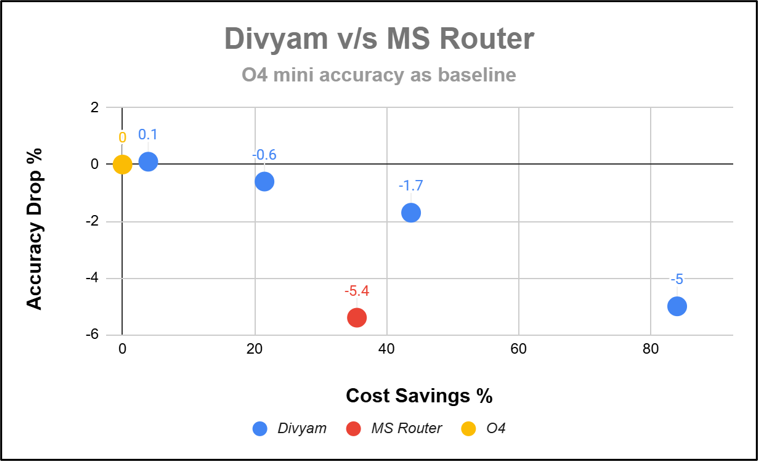

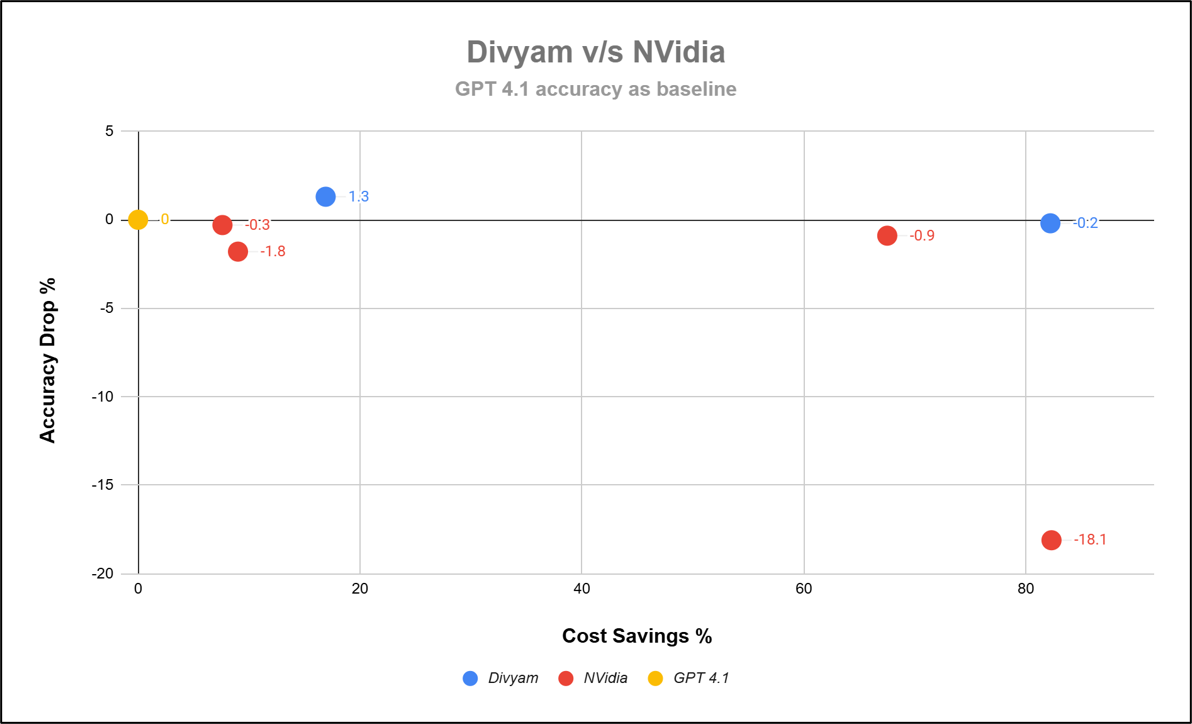

The cost-quality trade-offs of LLM usage are application-specific. Different applications have different tolerances, and an organization often has to choose between reach and quality. Divyam.AI provides a slider per application that you can configure to strike the desired balance. You can also observe cost savings and quality-metric improvements on our rich dashboards and compare performance. This can make all the difference between a positive and a negative ROI for the application.
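One way to model such a slider, offered as an assumption rather than a description of Divyam's implementation: treat the slider value as a weight between quality and normalized cost, and pick the model with the highest weighted score. At 0.0 the cheapest model wins; at 1.0 the highest-quality model wins. Model names, qualities, and costs are hypothetical.

```python
def pick_with_slider(candidates: list[tuple[str, float, float]], slider: float) -> str:
    """Pick a model given (name, quality, cost) tuples and a slider in [0, 1].

    slider = 0.0 favors the cheapest model; slider = 1.0 favors the best quality.
    Cost is normalized by the most expensive candidate so both terms are in [0, 1].
    """
    if not 0.0 <= slider <= 1.0:
        raise ValueError("slider must be between 0 and 1")
    if not candidates:
        raise ValueError("no candidates")
    max_cost = max(cost for _, _, cost in candidates) or 1.0  # avoid dividing by zero

    def score(candidate: tuple[str, float, float]) -> float:
        _, quality, cost = candidate
        return slider * quality - (1.0 - slider) * (cost / max_cost)

    return max(candidates, key=score)[0]


if __name__ == "__main__":
    models = [("premium-model", 0.95, 10.0), ("budget-model", 0.80, 1.0)]  # hypothetical
    for s in (0.0, 0.5, 0.9, 1.0):
        print(s, pick_with_slider(models, s))
    # 0.0 budget-model, 0.5 budget-model, 0.9 premium-model, 1.0 premium-model
```

A linear weighting keeps the slider easy to reason about: moving it right can only make higher-quality models more attractive relative to cheaper ones.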

Let us come to the inevitable question of data privacy. Your organization's data remains safe within your VPCs: Divyam is deployed within your enterprise boundaries, so your data stays put. Divyam has only control-plane access, which it uses to push in the latest model intelligence and monitor quality so that you are always at peak performance.

Divyam.AI is also available as a hosted solution if you want to get started with a single-line code change.

In conclusion, Divyam.AI learns from both its global expertise and your own historical data to build a private, use-case-specific knowledge base that trims away hundreds of irrelevant model choices, automatically selects the best one, and continuously monitors live quality. If performance ever dips, it intervenes instantly to protect production, and it reruns the whole process on schedule or the moment a new model emerges. All of this happens without manual effort, so your team can stay focused on delivering core value instead of chasing model upgrades or cost savings.
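The "if performance ever dips, it intervenes" step can be pictured as a rolling-window monitor over live quality scores. The sketch below is hypothetical: `QualityMonitor`, the window size, and the quality floor are illustrative, and the caller decides what intervening means (for example, routing traffic back to a known-good model).

```python
from collections import deque


class QualityMonitor:
    """Rolling-window monitor for live quality scores (hypothetical sketch)."""

    def __init__(self, window: int = 50, floor: float = 0.8) -> None:
        if window <= 0:
            raise ValueError("window must be positive")
        self.scores = deque(maxlen=window)  # keeps only the most recent scores
        self.floor = floor

    def record(self, score: float) -> bool:
        """Add one score; return True once a full window averages below the floor."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough evidence yet
        return sum(self.scores) / len(self.scores) < self.floor


if __name__ == "__main__":
    monitor = QualityMonitor(window=3, floor=0.8)
    for s in [0.9, 0.9, 0.9, 0.5]:
        if monitor.record(s):
            print("quality dipped: route traffic to the fallback model")
```

Waiting for a full window before alerting trades a little reaction time for fewer false alarms from a single bad response.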
